Introduction

In the information era, data has emerged as a sensitive and valuable asset. This intangible resource touches many aspects of human life, including individual identities, medical records, financial statements, business strategies, and national security information. The misuse of data can have far-reaching consequences, from personal coercion to economic espionage and national security threats. As de Villiers (2010) puts it, “information is the lifeblood of modern society” (p. 24).

The proliferation of computerized databases containing sensitive information has increased the risk of data breaches and fraudulent activities. According to a U.S. Government Accountability Office (GAO) report, “the loss of sensitive information can result in substantial harm, embarrassment, and inconvenience to individuals and may lead to identity theft or other fraudulent use of the information” (U.S. GAO, 2008, p. 1). The financial impact of such breaches is significant, with estimated losses associated with identity theft in the United States reaching $49.3 billion in 2006 alone (U.S. GAO, 2008).

This paper examines the importance of data protection, strategies for safeguarding sensitive information, and the ethical and legal challenges associated with data security measures.

The Importance of Protecting Sensitive Data

Sensitive data can be understood as any information whose loss of confidentiality, integrity, or availability could adversely affect its owner’s interests. Advances in data storage technology and retrieval software have made it easy to compile and maintain vast amounts of information about individuals and organizations. That growing volume of sensitive data has, in turn, attracted more malicious actors who seek access to it for illegitimate purposes.

Data protection is crucial for preserving the security of:

- Individuals: Personal information can be used for identity theft, financial fraud, or coercion.

- Organizations: Proprietary data and business strategies can be stolen to gain competitive advantages.

- Nations: Sensitive information related to national defense can compromise security if accessed by adversaries.

Strategies for Preventing Data Compromise

To mitigate the risk of data breaches, organizations must implement comprehensive information system controls. The U.S. GAO (2008) recommends focusing on several critical areas:

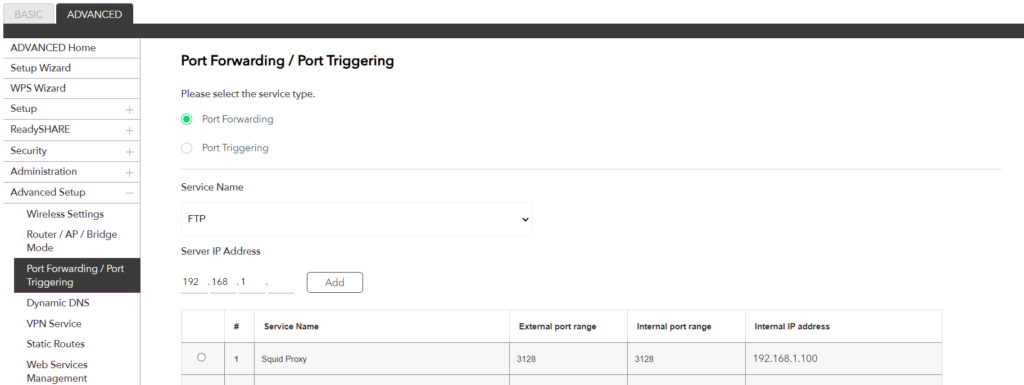

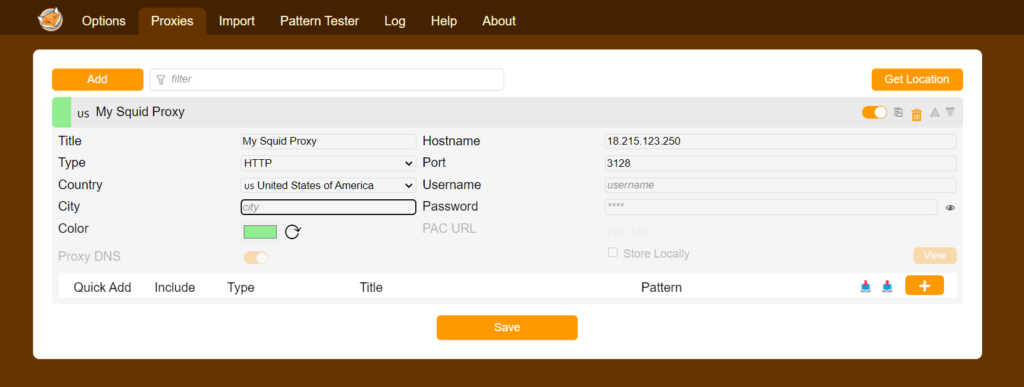

- Access controls: Ensuring only authorized individuals can read, alter, or delete data.

- Configuration management: Assuring that only authorized software programs are implemented.

- Segregation of duties: Reducing the risk that one individual can independently perform inappropriate actions without detection.

- Continuity of operations: Developing strategies to prevent significant disruptions of computer-dependent operations.

- Agency-wide information security programs: Establishing frameworks for ensuring that risks are understood and effective controls are properly implemented.
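The first and third controls in the list above can be illustrated with a minimal sketch. It is not taken from the GAO report: the role names, the permission table, and the function names are hypothetical, chosen only to show how an access check and a two-person rule might be expressed.

```python
from dataclasses import dataclass

# Hypothetical role-to-permission table; role and action names are illustrative only.
ROLE_PERMISSIONS = {
    "clerk": {"read"},
    "analyst": {"read", "alter"},
    "administrator": {"read", "alter", "delete"},
}


@dataclass(frozen=True)
class User:
    name: str
    role: str


def is_authorized(user: User, action: str) -> bool:
    """Access control: allow an action only if the user's role grants it."""
    # Unknown roles get an empty permission set, so they are denied by default.
    return action in ROLE_PERMISSIONS.get(user.role, set())


def approve_deletion(requester: User, approver: User) -> bool:
    """Segregation of duties: a deletion needs a second, different person
    who is also authorized to delete."""
    if requester.name == approver.name:
        return False  # no one may approve their own request
    return is_authorized(requester, "delete") and is_authorized(approver, "delete")
```

In this sketch a single administrator cannot delete data alone, because `approve_deletion` rejects a request whose requester and approver are the same person. A real system would also need authentication and logging, which are out of scope here.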

The Growing Threat of Internal Attacks

While external threats remain a concern, internal attacks have emerged as a significant risk to data security. Industry surveys suggest that “a substantial portion of computer security incidents are due to the intentional actions of legitimate users” (D’Arcy & Hovav, 2007, p. 113). A 2002 study by Vista Research estimated that 70% of security breaches involving losses exceeding $100,000 were internal, often perpetrated by disgruntled employees (D’Arcy & Hovav, 2007).

Herath and Wijayanayake (2009) define insider threat as “the intentional disruptive, unethical or illegal behavior by individuals with substantial internal access to the organization’s information assets” (p. 260). These internal breaches not only result in financial losses but can also lead to competitive disadvantages and loss of customer confidence.

To address this growing concern, D’Arcy and Hovav (2007) recommend implementing a combination of procedural and technical controls, including:

- Security policy statements

- Acceptable usage guidelines

- Security awareness education and training

- Biometric devices

- Filtering and monitoring software
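As a rough illustration of what monitoring software might do, the sketch below flags users whose number of record reads exceeds a threshold. It is an assumption for illustration, not a method described by D’Arcy and Hovav (2007): the event format, the function name `flag_heavy_readers`, and the threshold are all hypothetical.

```python
from collections import Counter


def flag_heavy_readers(events, threshold):
    """Return the users whose count of "read" events exceeds threshold.

    events is an iterable of (user, action) pairs; this format is hypothetical.
    The result is sorted by user name so the output is deterministic.
    """
    reads = Counter(user for user, action in events if action == "read")
    return sorted(user for user, count in reads.items() if count > threshold)
```

A flagged user is only a prompt for review, not evidence of misuse. The threshold would need to reflect what is normal for each job function.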

Privacy and Security: Balancing Organizational Needs and Individual Rights

The implementation of data protection measures, particularly those aimed at preventing internal attacks, raises significant ethical and legal concerns. While employers have a legitimate right to protect their assets and avoid potential litigation, this protection must be established in accordance with the law and with respect for individual privacy rights.

The right to privacy is recognized in Article 12 of the Universal Declaration of Human Rights (1948), and the Fourth Amendment to the United States Constitution protects individuals against unreasonable searches and seizures by the government. However, as Bupp (2001) notes, “industry executives, government officials, technologists, and activists are struggling with issues of security and privacy, attempting to balance the needs of citizens against those of business” (p. 70).

In the context of workplace monitoring, courts have generally held that since employers own the computers, they can make the rules for their use (Bupp, 2001). However, Friedman and Reed (2007) caution that “employers need to consider the effect such monitoring has on their employees because employee and employer attitudes about monitoring often diverge” (p. 75).

The Interconnected Nature of Privacy

Pickering (2008) offers a nuanced perspective on privacy, connecting it to other fundamental values:

- Security: Privacy is crucial for physical security; without protection against invasion, individuals may feel constantly at risk.

- Liberty: Privacy and liberty can be synonymous, particularly in the context of freedom from unwarranted governmental intrusions.

- Intimacy: Control over personal information is essential for establishing and maintaining intimate relationships.

- Dignity: Privacy violations can infringe upon personal dignity, especially when involving non-consensual use of personal information or images.

- Identity: Privacy choices reflect how individuals think of themselves and present themselves to others.

- Equality: The right amount and kind of privacy is critical to ensuring equality, particularly for marginalized groups.

Legal and Ethical Implications

The protection of sensitive data, while necessary, can lead to tortious conduct involving invasion of privacy. Nemeth (2005) advises organizations to be mindful of potential civil and criminal liabilities when conducting investigations that may violate privacy. He recommends several guidelines, including:

- Avoiding the use of force or verbal intimidation in investigations

- Collecting and disclosing personal information only to the extent necessary

- Informing subjects of disclosures to the greatest extent possible

- Avoiding the use of pretext interviews and advanced technology surveillance devices when possible

- Training employees in privacy safeguards

Conclusion

In the information age, data protection has become a critical concern for individuals, organizations, and nations. While robust security measures are essential to prevent data breaches and misuse, these measures must be implemented with careful consideration of ethical and legal implications. The challenge lies in striking a balance between organizational security needs and individual privacy rights, recognizing the interconnected nature of privacy, security, liberty, and other fundamental values in the digital era.

As technology continues to evolve, so too must our approaches to data protection and privacy. Future research should focus on developing more sophisticated, ethical, and legally compliant methods of safeguarding sensitive information while respecting individual rights and fostering a culture of trust and transparency in the digital realm.

References

Beeson, A. (1996). Privacy in cyberspace: Is your e-mail safe from the boss, the sysop, the hackers, and the cops? American Civil Liberties Union.

Bupp, N. (2001). Big brother and big boss are watching you. Working USA, 5(2), 69-81.

D’Arcy, J., & Hovav, A. (2007). Deterring internal information systems misuse. Communications of the ACM, 50(10), 113-117.

de Villiers, M. (2010). Information security standards and liability. Journal of Internet Law, 13(7), 24-33.

Friedman, B., & Reed, L. (2007). Workplace privacy: Employee relations and legal implications of monitoring employee e-mail use. Employee Responsibilities and Rights Journal, 19(2), 75-83.

Goldberg, J., & Zipursky, B. (2010). Torts as wrongs. Texas Law Review, 88(5), 917-986.

Herath, H. M. P. S., & Wijayanayake, W. M. K. O. (2009). Computer misuse in the workplace. Journal of Business Continuity and Emergency Planning, 3(3), 259-270.

Nemeth, C. (2005). Private security and the law. Elsevier.

Pickering, F. L. (2008). Privacy and confidentiality: The importance of context. Monist, 91(1), 52-67.

United States Government Accountability Office. (2008). Information security: Protecting personally identifiable information (GAO-08-343). https://www.gao.gov/assets/gao-08-343.pdf